Note: NVIDIA AI Grid & Telco Edge Compute Opportunity

Summary

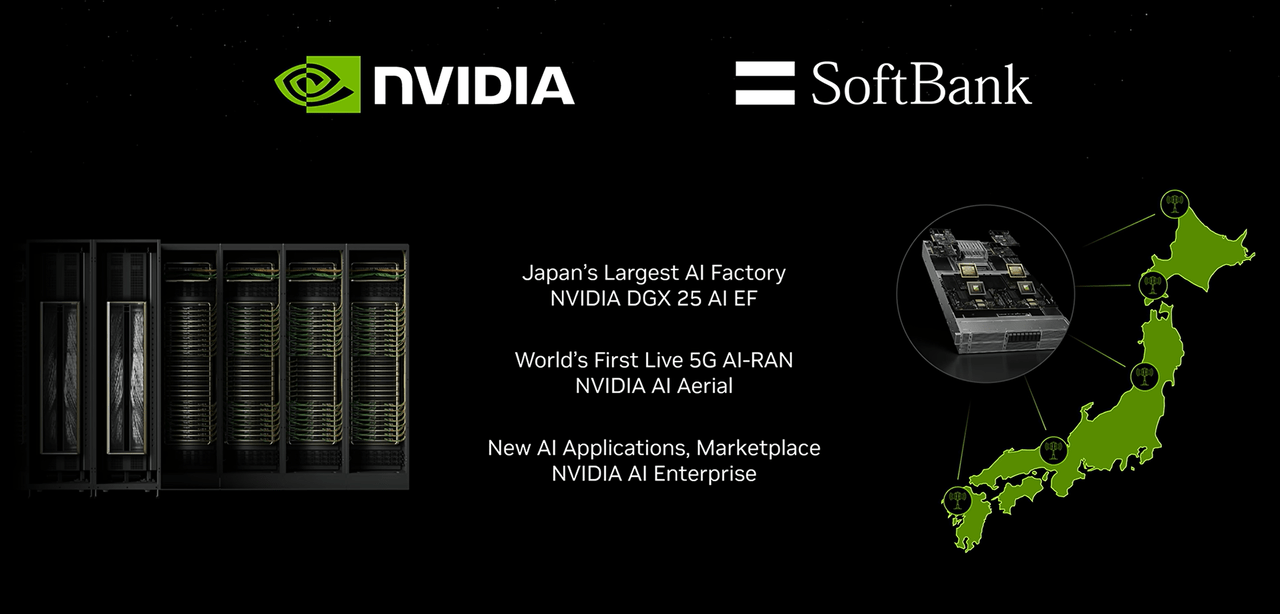

- NVDA's ambitious AI grid project in Japan, backed by Softbank, aims to solidify its leadership in edge AI inferencing and infrastructure solutions.

- Softbank's historical investments and strategic foresight, particularly in Yahoo Japan, Alibaba, and the iPhone, underscore its potential to leverage NVDA's AI technologies.

- NVDA's AI Aerial RAN solution offers flexibility and adaptability for evolving AI workloads, presenting a significant market opportunity despite challenges in the telecommunications sector.

- Softbank's AI application marketplace initiative could expand NVDA's reach and create an AI platform akin to Apple's App Store, deepening NVDA's competitive moat.

At the recent AI Summit Japan, NVIDIA (NVDA) CEO Jensen Huang revealed the company’s latest initiatives, including advancements in software 2.0, robotics, AI-powered factories, cellular base stations, and scaling inference for edge AI. While the event was widely covered for its technological implications, one moment stole the spotlight: Huang’s tribute to Softbank’s Masayoshi Son, along with the revelation of a previously undisclosed 2014 proposal by Softbank to take NVDA private through a management buyout.

The summit's primary focus, however, was NVDA’s ambitious plan to build an AI grid in Japan, solidifying its position as a leader in edge AI inferencing. Jensen's remarks also highlighted Masayoshi Son’s unparalleled ability to spot transformative technologies long before they became mainstream. Examples abound. Son's early investment in Yahoo led to a lucrative joint venture, Yahoo Japan, which still dominates the Japanese internet landscape even though Yahoo has faded almost everywhere else. Rather than being displaced by Google, Yahoo Japan has licensed Google's search technology since 2010 while keeping its dominant portal, underscoring Son's strategic foresight in navigating challenging markets.

Son’s prescience extended to e-commerce, where his $20m bet on Alibaba became one of Softbank's most celebrated investments, later fueling ventures like the Vision Fund and the ARM acquisition. His knack for identifying disruptive opportunities continued with the iPhone: before Softbank even owned a mobile carrier, Son pitched Apple’s Steve Jobs on his vision for a mobile device. The bold move paid off when Softbank acquired Vodafone Japan in 2006 and secured exclusive rights to sell the iPhone in Japan in 2008, reshaping the country's mobile market.

In 2017, Masayoshi Son launched the $100bn Vision Fund, making billions of dollars in direct investments in companies he believed had multibagger potential. Among them was NVDA, which Son saw as undervalued because investors at the time had not fully grasped the significance of CUDA and NVDA’s emerging dominance in AI. Jensen Huang, however, had resisted efforts to take NVDA private or merge it with Softbank, so Softbank instead built its stake by purchasing shares on the public market, becoming one of NVDA’s largest shareholders.

This position didn’t last. By 2019, mounting challenges in the Vision Fund, especially high-profile missteps like WeWork, forced Softbank to offload its NVDA stake. In hindsight, Son has expressed regret over selling NVDA too soon while retaining ARM. With NVDA now an AI powerhouse, Son has pivoted his strategy, aiming to build a partnership similar to his earlier successes with Yahoo Japan and the iPhone.

However, given NVDA’s dominant position, a joint venture like Yahoo Japan is no longer feasible. Instead, Softbank seeks to leverage its strengths in IT distribution, software, and telecommunications to act as a go-to-market (GTM) enabler for NVDA’s infrastructure solutions. This arrangement would introduce NVDA’s AI technologies to a broader user base, with Softbank presumably negotiating exclusive rights or priority access to key parts of the AI value chain in return.

The plan includes building five cloud AI data centers totaling 25 exaflops, presumably measured in FP8 precision given Huang's historical use of that metric. NVDA often quotes FP8 figures because its recent data center GPUs process FP8 operands at twice the rate of FP16: each value occupies half the bits, so the same silicon completes roughly twice as many operations per second and the headline number doubles. Consequently, 25 exaflops at FP8 is roughly equivalent in performance to 12.5 exaflops at FP16.
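To make the precision arithmetic concrete, here is a minimal Python sketch. It assumes, as the paragraph above does, a flat 2:1 ratio between FP8 and FP16 throughput on the same hardware; the `RELATIVE_RATE` table and `convert_exaflops` helper are hypothetical illustrations rather than an NVIDIA API, and real ratios vary by GPU generation and by whether sparsity is counted.

```python
# Hypothetical throughput multipliers relative to FP16 dense throughput.
# Assumption: a flat 2:1 FP8:FP16 ratio on the same hardware (not an official NVIDIA table).
RELATIVE_RATE = {"FP16": 1.0, "FP8": 2.0}


def convert_exaflops(value: float, from_precision: str, to_precision: str) -> float:
    """Restate a headline exaflops figure at another precision, assuming identical hardware."""
    if from_precision not in RELATIVE_RATE or to_precision not in RELATIVE_RATE:
        raise ValueError(f"unsupported precision: {from_precision!r} -> {to_precision!r}")
    # Normalize to an FP16-equivalent figure, then scale to the target precision.
    fp16_equivalent = value / RELATIVE_RATE[from_precision]
    return fp16_equivalent * RELATIVE_RATE[to_precision]


if __name__ == "__main__":
    headline = 25.0  # exaflops, assumed to be quoted at FP8
    print(convert_exaflops(headline, "FP8", "FP16"))  # 12.5
```

Restating headline figures at one common precision this way makes announcements quoted in FP8, FP16, or other formats easier to compare like for like.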