Updates: Cloudflare - The Three S-Curves (Pt.3) - NET's Strengths & Challenges In Edge Compute & GenAI

Summary

- In Part 3 we compare NET to its edge compute rivals.

- We discuss the attractiveness of Prince's vision of NET becoming the Connectivity Cloud.

- We discuss NET's challenges in offering IaaS services comparable to those of the hyperscalers, and its GenAI limitations.

- Companies discussed include AWS, Fastly, Akamai, Vercel, Netlify, and Edgio.

Act 3

Act 3 is NET’s edge compute business, an area that is still very much in incubation mode but promises substantial future growth as the 3rd S-Curve. While Act 3 is the most nascent area of NET’s business, it overlaps notably with Act 1 and is in many ways an extension of it. This is because demand for edge compute grew out of the limitations of CDNs amid the rising need for enhanced digital consumer experiences.

In the late 1990s and early 2000s, the Internet was growing at an unprecedented rate, with websites and online applications becoming increasingly complex and resource-intensive. This surge in digital content led to the birth of CDNs. The primary role of CDNs was straightforward: to accelerate the delivery of web content by caching it closer to end-users. Companies like Akamai (AKAM) pioneered this space, revolutionizing the way content was distributed and consumed. These early CDNs were the silent workhorses of the Internet, ensuring that websites loaded faster and more reliably, no matter where in the world the user was located.
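To make the caching idea concrete, here is a minimal Python sketch of what a single edge PoP does, assuming a simple time-to-live (TTL) policy. The class `EdgeCache`, the `origin` stand-in, and the 60-second TTL are hypothetical illustrations, not Akamai's or any other CDN's actual implementation; real CDNs also honour HTTP cache headers, evict entries under memory pressure, and coordinate across cache tiers.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class EdgeCache:
    """Toy cache for one edge PoP (hypothetical): serve fresh copies locally, else ask the origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float = 60.0):
        self.fetch_from_origin = fetch_from_origin
        self.ttl_seconds = ttl_seconds
        self._store: Dict[str, Tuple[float, bytes]] = {}  # url -> (time cached, body)
        self.hits = 0
        self.misses = 0

    def get(self, url: str, now: Optional[float] = None) -> bytes:
        now = time.monotonic() if now is None else now
        entry = self._store.get(url)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            self.hits += 1  # fresh copy at the edge: no round trip to the distant origin
            return entry[1]
        self.misses += 1  # missing or expired: fetch from the origin and keep a copy
        body = self.fetch_from_origin(url)
        self._store[url] = (now, body)
        return body


origin_calls: List[str] = []


def origin(url: str) -> bytes:
    """Stand-in for a faraway origin server (hypothetical)."""
    origin_calls.append(url)
    return b"<html>hello</html>"


cache = EdgeCache(origin, ttl_seconds=60.0)
body = cache.get("/index.html", now=0.0)   # miss: first request goes to the origin
body = cache.get("/index.html", now=10.0)  # hit: served from the PoP
body = cache.get("/index.html", now=90.0)  # miss: the cached copy is older than the TTL
```

Only the requests that miss travel back to the origin; every hit is answered from the PoP near the user, which is where the speed-up comes from.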

As the years passed, the digital landscape continued to evolve. Websites transformed from static pages into dynamic, interactive experiences. The rise of the mobile Internet, social media, and video streaming further pushed the boundaries of what CDNs could do. The traditional model of simply caching static content at the network edge began to show its limitations in handling dynamic content and personalized experiences. Meanwhile, data volumes grew sharply. Static, mostly text-based content consists of only a few kilobytes, which is very easy to cache and deliver. However, as the Internet shifted toward more visual content, CDNs had to handle images of roughly 10 megabytes (thousands of times more data than simple text-based content) and short videos of around 1,000 megabytes (i.e., 1 gigabyte), increasing page load times by orders of magnitude. At this point CDNs became even more important, because there was even more demand to cache content closer to the end user to offset the latency of handling more data.
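A back-of-the-envelope calculation shows why the jump in file sizes matters. The sketch below assumes a 5 KB text page (standing in for "a few kilobytes"), the 10 MB image and 1 GB video sizes from the paragraph above, and a hypothetical 50 Mbit/s connection. It ignores round-trip latency and protocol overhead, so the figures are idealized lower bounds rather than real page load times.

```python
# Idealized transfer time = size in bits / link bandwidth (ignores latency and protocol overhead).
SIZES_BYTES = {
    "text page": 5_000,            # assumption: "a few kilobytes" taken as 5 KB
    "image": 10_000_000,           # 10 MB
    "short video": 1_000_000_000,  # 1,000 MB = 1 GB
}
BANDWIDTH_BITS_PER_S = 50_000_000  # hypothetical 50 Mbit/s connection


def transfer_seconds(size_bytes: int, bandwidth_bps: int = BANDWIDTH_BITS_PER_S) -> float:
    """Seconds needed to push size_bytes through a link of bandwidth_bps bits per second."""
    return size_bytes * 8 / bandwidth_bps


times = {name: transfer_seconds(size) for name, size in SIZES_BYTES.items()}
image_vs_text = SIZES_BYTES["image"] / SIZES_BYTES["text page"]  # how many times more data

for name, seconds in times.items():
    print(f"{name:>11}: {seconds:10.4f} s")
print(f"image carries {image_vs_text:,.0f}x the data of the text page")
```

Under these assumptions the text page needs under a millisecond, the image about 1.6 seconds and the video about 160 seconds, a spread of more than five orders of magnitude.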

The increase in data was accompanied by growing demand for dynamic content, such as user-generated content (the comment section of a blog post or product reviews on an e-commerce site), personalized content (personalized product recommendations or personalized newsfeeds), and real-time content (live sports score updates, or streaming a live sports event). Because such content differs from user to user or changes by the second, it cannot simply be cached once and served to everyone. As a result, the demand for real-time data processing and low-latency interactions became more pronounced, highlighting a new set of challenges that traditional CDNs were not equipped to handle.

Enter the era of edge computing, beginning in the latter half of the 2010s. The concept of edge computing emerged as a natural evolution of the CDN model. If CDNs could cache content at the edge of the network, why not also process data there? This idea promised to bring computation closer to the user (e.g., for video streaming), reducing latency and enabling real-time data processing. Companies like NET and FSLY saw this potential early on and began to extend their CDN infrastructure to include edge compute capabilities.

Cloudflare Workers, for example, allowed developers to deploy JavaScript code that runs directly at the edge in NET’s PoPs, closer to end-users. This shift was not just about speeding up content delivery; it was about enabling entirely new types of applications. Real-time analytics, personalized content, and security measures (e.g., user authentication) could now be implemented at the edge rather than on a centralized server, significantly improving the user experience. Developers could build complex logic that executes in milliseconds, providing faster, more responsive applications.
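As an illustration of moving authentication to the edge, the following Python sketch checks an HMAC-signed token and builds a personalized response without contacting an origin server. It is a plain function, not the Workers API (Workers are typically written in JavaScript or TypeScript), and the header names, shared secret, and `handle_at_edge` function are all hypothetical.

```python
import hashlib
import hmac
from typing import Dict, Tuple

SECRET_KEY = b"hypothetical-shared-secret"  # assumption: the same key is provisioned to every PoP


def sign(user_id: str) -> str:
    """Token a (hypothetical) login service would issue: HMAC-SHA256 of the user id."""
    return hmac.new(SECRET_KEY, user_id.encode(), hashlib.sha256).hexdigest()


def handle_at_edge(headers: Dict[str, str]) -> Tuple[int, str]:
    """Hypothetical edge handler: authenticate and personalize without calling the origin."""
    user_id = headers.get("x-user-id", "")
    token = headers.get("x-auth-token", "")
    # compare_digest compares in constant time to avoid leaking timing information
    if not user_id or not hmac.compare_digest(token.encode(), sign(user_id).encode()):
        return 401, "unauthorized"  # rejected at the PoP; the origin never sees this request
    return 200, f"Welcome back, {user_id}!"  # personalized response generated at the edge


print(handle_at_edge({"x-user-id": "alice", "x-auth-token": sign("alice")}))
print(handle_at_edge({"x-user-id": "alice", "x-auth-token": "forged"}))
```

Both the rejection and the personalized greeting are produced at the PoP, so neither outcome pays for a round trip to a centralized server.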

Similarly, Fastly's (FSLY) Compute@Edge (now rebranded as Fastly Compute) introduced a powerful platform for running custom code at the edge. By leveraging WebAssembly (a low-level binary instruction format that higher-level languages like C++ can compile to; because it is closer in nature to machine code, it can execute faster than interpreted code), FSLY enabled developers to write high-performance, secure applications that could be deployed at scale across its global network. This marked a significant departure from the traditional CDN role, positioning these companies as key players in the emerging edge compute market.

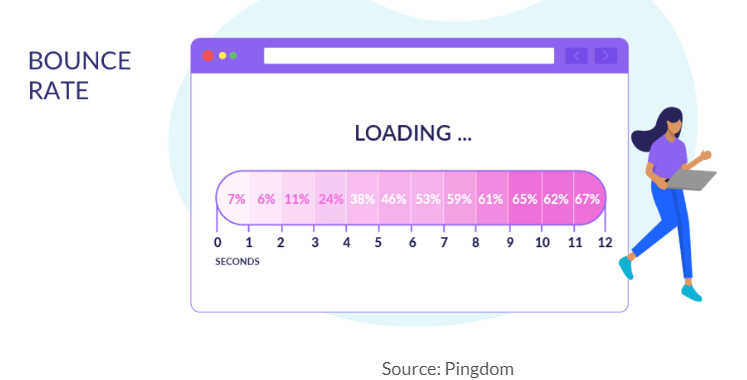

The catalyst behind the demand for next-gen CDNs and edge compute was that website owners need to limit their bounce rate, which rises with latency. If you can deliver a wider variety of content (text, images, video, dynamic content, etc.) faster, you will lower your bounce rate and make more conversions.

The transition from CDN to CDN + edge compute also opened up new opportunities for innovation. Industries such as gaming, IoT, and augmented reality, which require ultra-low latency and high-speed data processing, began to benefit from these advancements. For instance, online gaming platforms could provide smoother, lag-free experiences by processing game logic at the edge instead of in the cloud. IoT devices could perform local data processing, reducing the need to send vast amounts of data back to centralized servers.

Moreover, the COVID-19 pandemic accelerated the adoption of edge compute technologies. With a dramatic increase in online activities, from remote work and online education to streaming and virtual events, the need for efficient and scalable Internet infrastructure became more critical than ever. Companies that had already invested in edge computing were better positioned to handle the sudden surge in demand, offering reliable and high-performance services to their users.

Part of edge compute's appeal is also that it is serverless, meaning developers do not need to concern themselves with configuring the underlying infrastructure. This makes edge compute highly scalable, because applications can rapidly add hosts as demand increases and shed them as it falls. Developers can also focus more on the value-add and differentiation of their application code instead of spending time setting up and provisioning infrastructure resources.
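The automatic scaling described above can be sketched as a simple sizing rule, assuming each host handles a fixed number of requests per second. The 1,000 requests/sec per-host capacity and the traffic samples are hypothetical, and real platforms also weigh concurrency limits and warm-up; the point is only that the platform, not the developer, applies the rule.

```python
import math


def hosts_needed(requests_per_second: float, capacity_per_host: float = 1_000.0) -> int:
    """Hosts a serverless platform would keep running for a given load; scales to zero when idle."""
    if requests_per_second <= 0:
        return 0
    return math.ceil(requests_per_second / capacity_per_host)


traffic_rps = [0, 250, 4_200, 18_000, 900, 0]  # hypothetical load samples over a day
plan = [hosts_needed(rps) for rps in traffic_rps]
print(plan)
```

Capacity tracks demand in both directions, including scaling to zero when there is no traffic, which is why developers do not have to provision for the peak.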

Generally, edge compute will become increasingly important as websites and other online interactions become more digitalized. That said, we are only at the very beginning of this multi-decade trend. As trends such as AI, industrial IoT/OT, digital twins, the metaverse, and self-driving vehicles converge, edge compute will play a critical role.

NET vs. AWS

NET has a few competitors in the serverless edge compute space. FSLY is a strong competitor as one of the edge compute pioneers. AWS’ Lambda@Edge, an extension of AWS’ cloud-based serverless compute service AWS Lambda to the edge, is another strong competitor that could limit NET’s market potential. The clear advantage both NET and FSLY hold over AWS in edge compute is network coverage. An AWS Lambda function runs in a single AWS region, while Lambda@Edge runs globally across AWS data centres. Workers and Fastly Compute, however, run in a larger number of smaller, decentralized edge PoPs. This means users are usually closer to an application running on NET’s or FSLY’s network than to one running on Lambda@Edge, so they experience lower latency.
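The latency effect of PoP density can be approximated with great-circle distance and the speed of light in optical fiber (roughly 200 km per millisecond, about two-thirds of its speed in a vacuum). The sketch below compares a hypothetical user in Porto against a dense and a sparse PoP footprint; the city lists are illustrative assumptions and do not represent NET's, FSLY's, or AWS' actual networks. Real routes are longer than great-circle paths, so the results are best-case round-trip times.

```python
import math
from typing import Dict, Tuple

Coord = Tuple[float, float]  # (latitude, longitude) in degrees

EARTH_RADIUS_KM = 6_371.0
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def haversine_km(a: Coord, b: Coord) -> float:
    """Great-circle distance between two points on Earth."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def best_rtt_ms(user: Coord, pops: Dict[str, Coord]) -> Tuple[str, float]:
    """Nearest PoP and its best-case round-trip time (there and back over fiber)."""
    name, coords = min(pops.items(), key=lambda item: haversine_km(user, item[1]))
    return name, 2 * haversine_km(user, coords) / FIBER_KM_PER_MS


user_porto: Coord = (41.15, -8.61)
dense_pops = {  # hypothetical footprint with many smaller PoPs
    "Lisbon": (38.72, -9.14), "Madrid": (40.42, -3.70), "Paris": (48.86, 2.35),
    "London": (51.51, -0.13), "Frankfurt": (50.11, 8.68),
}
sparse_pops = {"London": (51.51, -0.13), "Frankfurt": (50.11, 8.68)}  # hypothetical fewer, larger sites

dense_choice = best_rtt_ms(user_porto, dense_pops)
sparse_choice = best_rtt_ms(user_porto, sparse_pops)
print(dense_choice, sparse_choice)
```

With these assumptions the dense footprint serves the user from Lisbon at roughly 2.7 ms round trip, while the sparse one falls back to London at roughly 13 ms, before any processing time is counted.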

The other advantage NET and FSLY hold over AWS is that they run applications on V8 isolates and WebAssembly (Wasm) modules, respectively. These are 1000x and 100,000x smaller, respectively, than the containers used in cloud environments. Because they are so lightweight, NET and FSLY have eliminated the cold start time experienced by containerized applications. A cold start occurs when a request arrives for code that has not been used for a while. After such inactivity, the platform has to provision compute (i.e., CPU, memory), start the container, initialize the language runtime (e.g., Python, Node.js), and load the code before it can execute, all of which takes time. In contrast, both NET’s V8 isolates and FSLY’s Wasm modules are designed to start almost instantaneously, because they skip the heavy initialization of a full container that cloud serverless platforms require. This means the runtime and code are ready to execute immediately, reducing startup latency to virtually zero and delivering faster responses to incoming requests.
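The cold start mechanics can be modeled with a toy function host that keeps an instance warm until it sits idle past a timeout. The 500 ms and 5 ms start-up costs, the 2 ms execution time, and the 10-minute idle timeout are hypothetical round numbers chosen to contrast a container-style start with an isolate-style start; they are not measured figures for AWS, NET, or FSLY.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FunctionHost:
    """Toy model of one deployed function: a warm instance is reused until it idles too long."""
    cold_start_ms: float            # one-off setup cost when no warm instance exists (hypothetical)
    exec_ms: float = 2.0            # time to run the handler once warm (hypothetical)
    idle_timeout_s: float = 600.0   # instance is torn down after this much inactivity (hypothetical)
    last_used_s: Optional[float] = None

    def handle(self, now_s: float) -> float:
        """Latency in ms for a request arriving at now_s."""
        # cold if never used, or idle longer than the timeout (instance was torn down)
        cold = self.last_used_s is None or now_s - self.last_used_s > self.idle_timeout_s
        self.last_used_s = now_s
        return (self.cold_start_ms if cold else 0.0) + self.exec_ms


container = FunctionHost(cold_start_ms=500.0)  # hypothetical container-style start
isolate = FunctionHost(cold_start_ms=5.0)      # hypothetical isolate-style start

request_times_s = [0, 30, 2_000]  # the third request arrives after a long idle gap
container_latency = [container.handle(t) for t in request_times_s]
isolate_latency = [isolate.handle(t) for t in request_times_s]
print(container_latency, isolate_latency)
```

Only the first request and the one arriving after the long idle gap pay the start-up cost; with the cheaper isolate-style start, that penalty shrinks from hundreds of milliseconds to a few.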

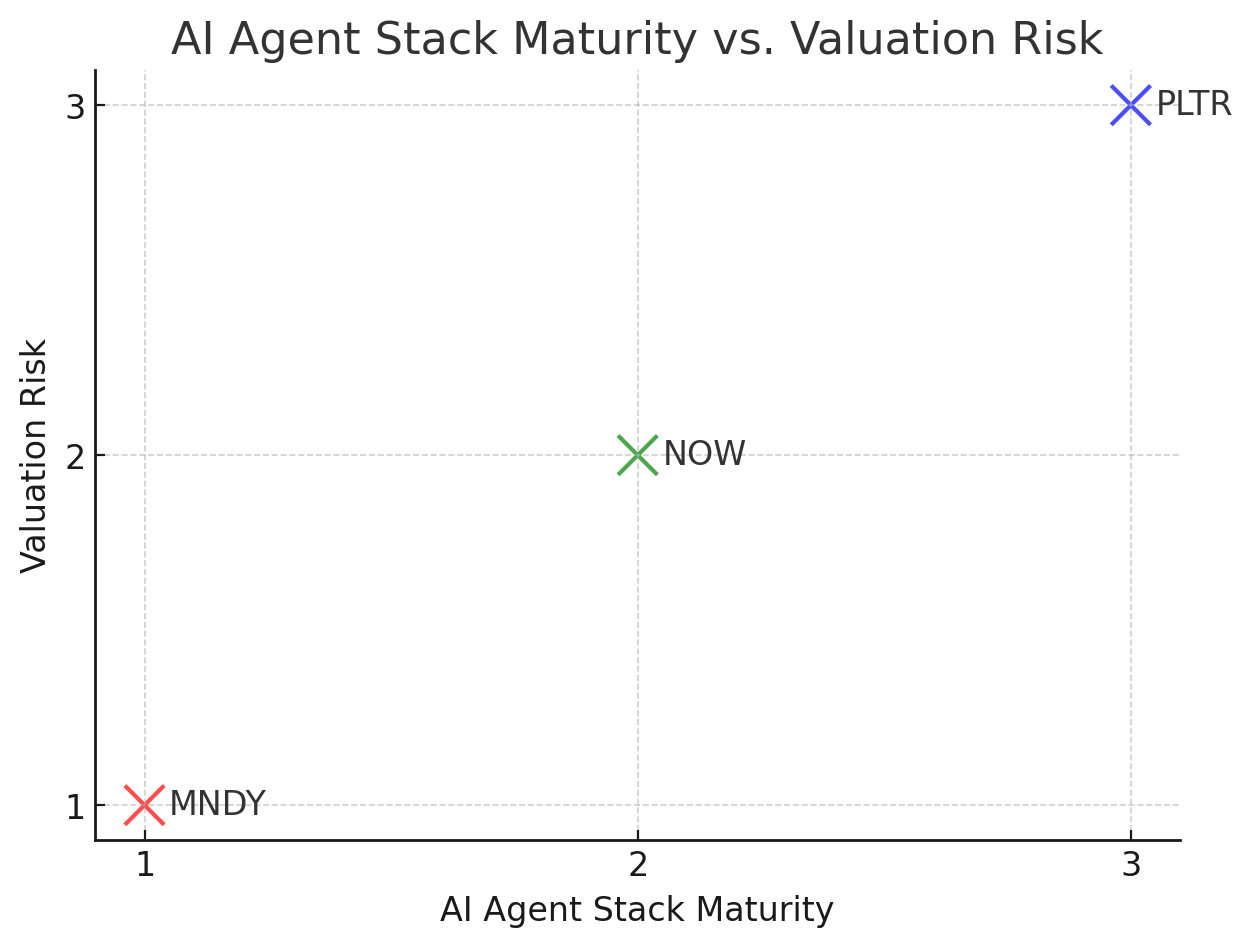

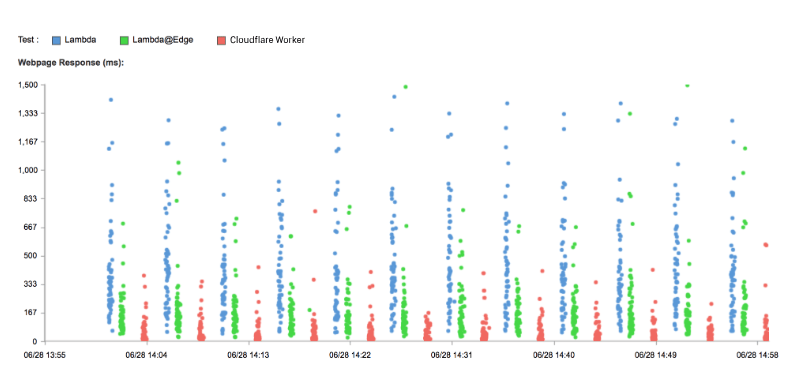

The collective latency reductions that NET achieves over AWS Lambda and Lambda@Edge, from more dispersed global PoP coverage and faster runtime initialization, are shown in the following chart.

Despite NET’s and FSLY’s advantages over AWS, we are surprised they have not gained more momentum among developers since we began covering them in early 2021. With hindsight, we attribute the slower-than-anticipated adoption to certain drawbacks of Cloudflare Workers and Fastly Compute. The Workers platform, for example, historically supported only JavaScript, TypeScript, and WebAssembly natively. This was a limitation for developers who wanted to use other languages like Python or Java. Strictly speaking, Python and Java code can also run as WebAssembly, as can code in many other languages such as Rust and C++, but the tooling for doing so is less mature (for Python, projects like Pyodide and MicroPython compile the interpreter itself to WebAssembly), which creates friction for Python and Java developers. NET has very recently introduced native Python support (launched in open beta in April 2024), a big milestone that should lift developer adoption, though limited native language support has been a headwind to date.

In contrast, AWS Lambda has natively supported a wide range of languages, including Python and Java, for a long time, without the need for any conversion (Lambda@Edge itself supports Node.js and Python). This means developers can use these languages directly and leverage their full ecosystems and libraries without modification, and without concerning themselves with WebAssembly compilation. This difference in language support between Lambda and Workers illuminates a difference in strategic goals. AWS is focused on user experience and on being the most accommodating platform for developers, while NET has been so relentlessly focused on pushing performance boundaries, such as eliminating cold starts, that it needed to keep the number of natively supported languages manageable. That said, the former strategy has clearly been more successful to date, as Lambda@Edge is running multiple times more workloads.